Showing posts with label dwell time. Show all posts

Friday, July 8, 2011

Gliding and Saccadic Gaze Gesture Recognition in Real Time (Rozado, 2011)

David Rozado, with the Department of Neural Computation at the Universidad Autonoma de Madrid, has developed a neural network approach for detecting gaze gestures in real time. I met David at ITU Copenhagen last summer when he was visiting and we discussed this research; I'm happy to see that it came out with such great results. This research was part of David's Ph.D. thesis, which focused on Hierarchical Temporal Memory (HTM) neural networks, a bio-inspired pattern recognition algorithm. Using a low-cost webcam and the ITU Gaze Tracker, he is able to recognize ten different gestures with 90% accuracy using raw data. When a fixation detection algorithm and dwell time triggers are employed, it is possible to achieve 100% detection rates (at the expense of longer activation times).

0 comments

Labels: dwell time, gazetracker, hci

Monday, June 29, 2009

Video from COGAIN2009

John Paulin Hansen has posted a video showing some highlights from the annual COGAIN conference. It demonstrates three available gaze interaction solutions: the COGAIN GazeTalk interface, Tobii Technologies MyTobii and Alea Technologies IG-30. These interfaces rely on dwell-activated on-screen keyboards (i.e. same procedure as last year).

0 comments

Labels: assistive technology, cogain, dwell time, gazetalk

Monday, November 17, 2008

Wearable Augmented Reality System using Gaze Interaction (Park et al., 2008)

Hyung Min Park, Seok Han Lee and Jong Soo Choi from the Graduate School of Advanced Imaging Science, Multimedia & Film at the University of Chung-Ang, Korea presented a paper on their Wearable Augmented Reality System (WARS) at the 7th IEEE/ACM International Symposium on Mixed and Augmented Reality. They use a half-blink mode (called "aging") for selection which is detected by their custom eye tracking algorithms. See the end of the video.

Undisturbed interaction is essential to provide immersive AR environments. There have been a lot of approaches to interact with VEs (virtual environments) so far, especially in hand metaphor. When the user's hands are being used for hand-based work such as maintenance and repair, necessity of alternative interaction technique has arisen. In recent research, hands-free gaze information is adopted to AR to perform original actions in concurrence with interaction [3, 4]. There has been little progress on that research, still at a pilot study in a laboratory setting. In this paper, we introduce such a simple WARS (wearable augmented reality system) equipped with an HMD, scene camera, eye tracker. We propose 'Aging' technique improving traditional dwell-time selection, demonstrate AR gallery – dynamic exhibition space with wearable system.

Download paper as PDF.

Tuesday, November 11, 2008

Gaze vs. Mouse in Games: The Effects on User Experience (Gowases T, Bednarik R, Tukiainen M)

Tersia Gowases, Roman Bednarik (blog) and Markku Tukiainen at the Department of Computer Science and Statistics, University of Joensuu, Finland had a paper published in the proceedings of the 16th International Conference on Computers in Education (ICCE).

"We did a simple questionnaire-based analysis. The results of the analysis show some promises for implementing gaze-augmented problem-solving interfaces. Users of gaze-augmented interaction felt more immersed than the users of other two modes - dwell-time based and computer mouse. Immersion, engagement, and user-experience in general are important aspects in educational interfaces; learners engage in completing the tasks and, for example, when facing a difficult task they do not give up that easily. We also did analysis of the strategies, and we will report on those soon. We could not attend the conference, but didn’t want to disappoint eventual audience. We thus decided to send a video instead of us." (from Roman's blog)

Abstract

"The possibilities of eye-tracking technologies in educational gaming are seemingly endless. The question we need to ask is what the effects of gaze-based interaction on user experience, strategy during learning and problem solving are. In this paper we evaluate the effects of two gaze based input techniques and mouse based interaction on user experience and immersion. In a between-subject study we found that although mouse interaction is the easiest and most natural way to interact during problem solving, gaze-based interaction brings more subjective immersion. The findings provide a support for gaze interaction methods into computer-based educational environments."

Download paper as PDF.

Some of this research has also been presented within the COGAIN association, see:

- Gowases Tersia (2007) Gaze vs. Mouse: An evaluation of user experience and planning in problem solving games. Master’s thesis May 2, 2007. Department of Computer Science, University of Joensuu, Finland. Download as PDF

0 comments

Labels: dwell time, evaluation, game, navigation

Tuesday, June 3, 2008

Eye typing at the Bauhaus University of Weimar

The Psychophysiology and Perception group, part of the Faculty of Media at the Bauhaus University of Weimar, is conducting research on gaze-based text entry. Their past research projects include the Qwerty on-screen dwell-based keyboard, IWrite, pEYEWrite and StarWrite. Thanks to Mario Urbina for the notification.

"Qwerty is based on dwell time selection. Here the user has to stare for 500 ms a determinate character to select it. QWERTY served us, as comparison base line for the new eye typing systems. It was implemented in C++ using QT libraries."

IWrite

"A simple way to perform a selection based on saccadic movement is to select an item by looking at it and confirm its selection by gazing towards a defined place or item. Iwrite is based on screen buttons. We implemented an outer frame as screen button. That is to say, characters are selected by gazing towards the outer frame of the application. This lets the text window in the middle of the screen for comfortable and safe text review. The order of the characters, parallel to the display borders, should reduce errors like the unintentional selection of items situated in the path as one moves across to the screen button.The strength of this interface lies on its simplicity of use. Additionally, it takes full advantage of the velocity of short saccade selection. Number and symbol entry mode was implemented for this editor in the lower frame. Iwrite was implemented in C++ using QT libraries."

StarWrite

"In StarWrite, selection is also based on saccadic movements to avoid dwell times. The idea of StarWrite is to combine eye typing movements with feedback. Users, mostly novices, tend to look to the text field after each selection to check what has been written. Here letters are typed by dragging them into the text field. This provides instantaneous visual feedback and should spare checking saccades towards text field. When a character is fixated, both it and its neighbors are highlighted and enlarged in order to facilitate the character selection. In order to use x- and y-coordinates for target selection, letters were arranged alphabetically on a half-circle in the upper part of the monitor. The text window appeared in the lower field. StarWrite provides a lower case as well, upper case, and numerical entry modes, that can be switched by fixating for 500 milliseconds the corresponding buttons, situated on the lower part of the application. There are also placed the space, delete and enter keys, which are driven by a 500 ms dwell time too. StarWrite was implemented in C++ using OpenGL libraries for the visualization."

pEYEWrite

"Pie menus have already been shown to be powerful menus for mouse or stylus control. They are two-dimensional, circular menus, containing menu items displayed as pie-formed slices. Finding a trade-off between user interfaces for novice and expert users is one of the main challenges in the design of an interface, especially in gaze control, as it is less conventional and utilized than input controlled by hand. One of the main advantages of pie menus is that interaction is very easy to learn. A pie menu presents items always in the same position, so users can match predetermined gestures with their corresponding actions. We therefore decided to transfer pie menus to gaze control and try it out for an eye typing approach. We designed the Pie menu for six items and two depth layers. With this configuration we can present (6 x 6) 36 items. The first layer contains groups of five letters ordered in pie slices.."

Associated publications

- Huckauf, A. and Urbina, M. H. 2008. Gazing with pEYEs: towards a universal input for various applications. In Proceedings of the 2008 Symposium on Eye Tracking Research & Applications (Savannah, Georgia, March 26 - 28, 2008). ETRA '08. ACM, New York, NY, 51-54. [URL] [PDF] [BIB]

- Urbina, M. H. and Huckauf, A. 2007. Dwell time free eye typing approaches. In Proceedings of the 3rd Conference on Communication by Gaze Interaction - COGAIN 2007, September 2007, Leicester, UK, 65--70. Available online at http://www.cogain.org/cogain2007/COGAIN2007Proceedings.pdf [PDF] [BIB]

- Huckauf, A. and Urbina, M. 2007. Gazing with pEYE: new concepts in eye typing. In Proceedings of the 4th Symposium on Applied Perception in Graphics and Visualization (Tubingen, Germany, July 25 - 27, 2007). APGV '07, vol. 253. ACM, New York, NY, 141-141. [URL] [PDF] [BIB]

- Urbina, M. H. and Huckauf, A. 2007. pEYEdit: Gaze-based text entry via pie menus. In Conference Abstracts. 14th European Conference on Eye Movements ECEM2007. Kliegl, R. & Brenstein, R. (Eds.) (2007), 165-165.

Wednesday, March 26, 2008

Last week of prototyping

Back from a short Easter holiday, the final week of developing the prototype is here. The functionality of the interface is OK, but there is major tweaking and bug testing to be performed. The deadline for any form of new features is Monday 31st of March. I intend to use all of April for setting up the evaluation experiments and procedures. As always there is so much more that I would like to incorporate; every day brings new ideas.

The final version will include:

- A configurable dwell button component. This enables dragging and dropping dwell buttons into Windows projects, with individual configuration of dwell time, icons etc.

- A novel gaze-based menu system that utilizes saccade selection (two-step dwell). This highly configurable interface component displays itself when activated. It aims at solving the Midas touch problem while making better use of the screen real estate. The two steps in the activation process can be set to specific activation speeds (dwells). More on this later.

- A gaze-based memory game using a 36-card layout. On a dwell, a card "turns" over and shows its symbol (a flag). The user then selects another card. If the two cards match, they are removed; if they differ, they are turned back over. Got some nice graphical effects.

- A gaze-based picture viewer that zooms into the photo the user is gazing at. More on this later.

- A gaze-based media/music player. This component will scan the computer for artists, albums and songs. These items are then accessible through a gaze-driven interface where the user can create playlists and perform the usual functions (volume +/-, pause, stop, next, previous etc.)

- Perhaps just one little surprise more..
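The matching rule of the memory game in the list above can be sketched roughly as follows. This is only an illustration in Python (the prototype itself is built with WPF and C#); the class name `MemoryGame`, its methods and the returned status strings are hypothetical and not taken from the actual implementation.

```python
import random


class MemoryGame:
    """Minimal model of a pair-matching game driven by dwell selections."""

    def __init__(self, symbols, seed=None):
        # Each symbol appears twice; 18 symbols give the 36-card layout.
        self.cards = list(symbols) * 2
        random.Random(seed).shuffle(self.cards)
        self.removed = set()   # indices of cards already matched
        self.face_up = None    # index of the first card of the current pair

    def select(self, index):
        """Handle a completed dwell on a card and report what happened."""
        if index in self.removed or index == self.face_up:
            return "ignored"
        if self.face_up is None:
            self.face_up = index
            return "turned"
        first, self.face_up = self.face_up, None
        if self.cards[first] == self.cards[index]:
            self.removed.update((first, index))
            return "match"
        return "mismatch"  # the UI would turn both cards back over

    def finished(self):
        return len(self.removed) == len(self.cards)
```

A UI layer would call `select()` once per completed dwell and animate the cards according to the returned status.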

Eight weeks so far. Curiosity, dedication and plain ol´ hard work, nothing else to it.

0 comments

Labels: dwell time, gaze memory, interface design, Midas Touch, WPF

Monday, March 10, 2008

Inspiration: Dwell-Based Pointing in Applications (Muller-Tomfelde, 2007)

While researching the optimal default value for dwell time execution I stumbled upon this paper by Christian Muller-Tomfelde at the CSIRO ICT Centre, Australia. It does not concern dwell time in the aspect of gaze based interaction but instead focuses on how we handle dwell times while pointing towards objects and conveying this reference to a communication partner. How long can this information be withheld before the interaction becomes unnatural?

Abstract

"This paper describes exploratory studies and a formal experiment that investigate a particular temporal aspect of human pointing actions. Humans can express their intentions and refer to an external entity by pointing at distant objects with their fingers or a tool. The focus of this research is on the dwell time, the time span that people remain nearly motionless during pointing at objects. We address two questions: Is there a common or natural dwell time in human pointing actions? What implications does this have for Human Computer Interaction? Especially in virtual environments, feedback about the referred object is usually provided to the user to confirm actions such as object selection. A literature review and two studies led to a formal experiment in a hand-immersive virtual environment in search for an appropriate feedback delay time for dwell-based pointing actions. The results and implications for applications for Human Computer Interaction are discussed. "

I find the part about the visual feedback experiment interesting.

"We want to test whether a variation of the delay of an explicit visual feedback for a pointing action has an effect of the perception of the interaction process. First, feedback delay time above approximately 430 ms is experienced by users to happen late. Second, for a feedback delay time above approximately 430 ms users experience waiting for feedback to happen and third, feedback delay below 430 ms is considered by users to be natural as in real life conversations. "

Questions asked:

- 1: Do you have the impression that the system feedback happened in a reasonable time according to your action? Answer: confirmation occurred too fast (1), too late (7).

- 2: Did you have the feeling to wait for the feedback to happen? Answer: no I didn’t have to wait (1), yes, I waited (7).

- 3: Did you have the impression that the time delay for the feedback was natural? (i.e., as in a real life communication situation) Answer: time delay is not natural (1), quite natural (7).

"This allows us to recommend a feedback delay time for manual pointing actions of approximately 350 to 600 ms as a starting point for the development of interactive applications. We have shown that this feedback delay is experienced by users as natural and convenient and that the majority of observers of pointing actions gave feedback within a similar time span."

0 comments

Labels: dwell time, hci, inspiration

Monday, March 3, 2008

Zooming and Expanding Interfaces / Custom components

The reviewed papers on zooming interaction styles inspired me to develop a set of zoom-based interface components. This interaction style suits gaze input because it helps overcome the inaccuracy and jitter of eye movements. My intention is that the interface components should be completely standalone, customizable and straightforward to use, ideally included in new projects by importing one file and writing one line of code.

The first component is a dwell-based menu button that on fixation will a) provide a dwell-time indicator by animating a small glow effect surrounding the button image and b) after 200 ms expand an ellipse that houses the menu options. This produces a two-step dwell activation while making use of the display area in a much more dynamic way. The animation is put in place to keep the user's fixation on the button for the duration of the dwell time. The items in the menu are displayed when the ellipse has reached its full size.

This gives the user feedback in the parafoveal region, and at the same time the glow of the button icon stops, indicating a full dwell-time execution. (It is a bit hard to describe in words and perhaps easier to understand from the images below.) The parafoveal region of our visual field is located just outside the foveal region (where full-resolution vision takes place). The foveal area is about the size of a thumbnail at arm's length; items in the parafoveal region can still be seen, but with reduced resolution/sharpness. We do see them but have to make a short saccade to bring them into full resolution. In other words, the menu items pop out at a distance that attracts a short saccade, which is easily discriminated by the eye tracker. (Just4fun test your visual field)

Upon fixation the button image displays an animated glow effect indicating the dwell process. The image above illustrates how the menu items pop out on the ellipse surface at the end of the dwell. Note that the ellipse grows in size during a 300 ms period; the exact timing is configurable by passing a parameter in the XAML design page.
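To make the timing of the two-step dwell concrete, here is a small sketch of how the visual state of the button could be derived from the time the gaze has rested on it. It is written in Python purely for illustration (the component itself is WPF/XAML). The names `ButtonState` and `button_state`, the linear animation curves, and the assumption that the glow runs for the whole 200 ms + 300 ms period are mine, not details of the actual control.

```python
from dataclasses import dataclass


@dataclass
class ButtonState:
    glow: float           # dwell indicator progress, 0.0 to 1.0
    ellipse_scale: float  # 0.0 (hidden) to 1.0 (full size)
    items_visible: bool   # menu items appear once the ellipse is full size


def button_state(fixation_ms, expand_after_ms=200, grow_ms=300):
    """Return the visual state after `fixation_ms` of continuous fixation."""
    total_ms = expand_after_ms + grow_ms
    t = max(0.0, float(fixation_ms))
    # Assumption: the glow progresses linearly over the whole dwell.
    glow = min(t / total_ms, 1.0)
    # The ellipse starts growing only after the first dwell step.
    scale = min(max(t - expand_after_ms, 0.0) / grow_ms, 1.0)
    return ButtonState(glow=glow, ellipse_scale=scale, items_visible=scale >= 1.0)
```

With the default values, `button_state(350)` describes an ellipse at half size with the menu items still hidden, and from 500 ms onwards the items are shown.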

The second prototype I have been working on is also inspired by the usage of expanding surfaces. The purpose is a gaze-driven photo gallery where thumbnail-sized image previews become enlarged upon glancing at them. The enlarged view displays an icon which can be fixated to make the photo appear in full size.

Upon glancing at the thumbnails they become enlarged, which activates the icon at the bottom of each photo. This enables the user to make a second fixation on it to bring the photo into a large view. This view has two icons to navigate back and forth (next photo). By fixating outside the photo, the view goes back to the overview.

0 comments

Labels: button, dwell time, feedback, glow, interface design, noisy gaze data, zoom

Wednesday, February 20, 2008

Inspiration: ZoomNavigator (Skovsgaard, 2008)

Following up on the StarGazer text entry interface presented in my previous post, another approach to using zooming interfaces is employed in the ZoomNavigator (Skovsgaard, 2008). It addresses the well-known issue of using gaze as input on traditional desktop systems, namely inaccuracy and jitter. An interesting solution which relies on dwell-time execution, compared to the EyePoint system (Kumar & Winograd, 2007), which is described in the next post.

Abstract

The goal of this research is to estimate the maximum amount of noise of a pointing device that still makes interaction with a Windows interface possible. This work proposes zoom as an alternative activation method to the more well-known interaction methods (dwell and two-step-dwell activation). We present a magnifier called ZoomNavigator that uses the zoom principle to interact with an interface. Selection by zooming was tested with white noise in a range of 0 to 160 pixels in radius on an eye tracker and a standard mouse. The mouse was found to be more accurate than the eye tracker. The zoom principle applied allowed successful interaction with the smallest targets found in the Windows environment even with noise up to about 80 pixels in radius. The work suggests that the zoom interaction gives the user a possibility to make corrective movement during activation time eliminating the waiting time found in all types of dwell activations. Furthermore zooming can be a promising way to compensate for inaccuracies on low-resolution eye trackers or for instance if people have problems controlling the mouse due to hand tremors.
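The abstract mentions adding noise of up to 160 pixels in radius to the pointer. One way such a perturbation could be simulated is sketched below in Python. The function name `add_pointer_noise` and the choice of a uniform distribution over a disc are assumptions for illustration; the paper's own noise model may differ.

```python
import math
import random


def add_pointer_noise(x, y, radius, rng=random):
    """Displace a pointer position by a random offset within `radius` pixels.

    Assumption: offsets are uniform over the disc. Taking the square root of
    the radial draw avoids clustering samples near the centre.
    """
    r = radius * math.sqrt(rng.random())
    theta = rng.uniform(0.0, 2.0 * math.pi)
    return x + r * math.cos(theta), y + r * math.sin(theta)
```

Passing a seeded `random.Random` instance as `rng` makes a simulated session repeatable, which helps when comparing selection methods at the same noise level.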

The sequence of images shows screenshots from ZoomNavigator zooming towards a Windows file called ZoomNavigator.exe.

The principles of ZoomNavigator are shown in the figure above. Zooming is used to focus on the attended object and eventually make a selection (unambiguous action). ZoomNavigator allows actions similar to those found in a conventional mouse (Skovsgaard, 2008). The system is described in a conference paper titled "Estimating acceptable noise-levels on gaze and mouse selection by zooming". Download paper (pdf)

Two-step zoom

The two-step zoom activation is demonstrated in the video below by IT University of Copenhagen (ITU) research director Prof. John Paulin Hansen. Notice how the error rate is reduced by the zooming style of interaction, making it suitable for applications that need detailed discrimination. It might be slower, but error rates drop significantly.

"Dwell is the traditional way of making selections by gaze. In the video we compare dwell to magnification and zoom. While the hit-rate is 10 % with dwell on a 12 x 12 pixels target, it is 100 % for both magnification and zoom. Magnification is a two-step process though, while zoom only takes on selection. In the experiment, the initiation of a selection is done by pressing the spacebar. Normally, the gaze tracking system will do this automatically when the gaze remains within a limited area for more than approx. 100 ms"

For more information see the publications of the ITU.
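The quote above notes that a gaze tracker normally initiates a selection when the gaze stays within a limited area for roughly 100 ms. A minimal sketch of such a check is given below in Python. The function name, the 30-pixel radius, and the choice to measure distance from the first sample of the current window are all assumptions for illustration, not the method used by any particular tracker.

```python
def first_fixation(samples, max_radius_px=30.0, min_duration_ms=100.0):
    """Return (start_ms, end_ms) of the first fixation, or None.

    `samples` is a time-ordered list of (t_ms, x, y) tuples. A fixation is
    declared when consecutive samples stay within `max_radius_px` of the
    first sample of the window for at least `min_duration_ms`.
    """
    start = 0
    for i, (t, x, y) in enumerate(samples):
        t0, x0, y0 = samples[start]
        if (x - x0) ** 2 + (y - y0) ** 2 > max_radius_px ** 2:
            start = i  # gaze left the area: restart the window here
        elif t - t0 >= min_duration_ms:
            return t0, t
    return None
```

A more robust variant would compare against the running centroid of the window rather than its first sample, since noisy gaze data can make a single sample a poor anchor.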

Thursday, February 7, 2008

Midas touch, Dwell time & WPF Custom controls

How do you distinguish between users glancing at objects and fixations made with the intention to start a function? This is the well-known problem usually referred to as the "Midas touch problem". Interfaces that rely on gaze as the only means of input must support this style of interaction and be capable of telling glances, when the user is just looking around, from fixations intended to start a function.

There are some solutions to this. A frequently occurring one is the concept of "dwell time", where you activate functions simply by a prolonged fixation on an icon/button/image, usually in the range of 400-500 ms or so. This is a common solution when developing gaze interfaces for assistive technology for users suffering from amyotrophic lateral sclerosis (ALS) or other "paralyzing" conditions where no modality other than gaze input can be used. It does come with some issues: the prolonged fixation means that the interaction is slower, since the user has to sit through the dwell time, but mainly it adds stress to the interaction, since everywhere you look seems to activate a function.

As part of my intention to develop a set of components for the interface, a dwell-based interaction style should be supported. It may not be the best method, but I do wish to have it in the drawer just to have the opportunity to evaluate it and perform experiments with it.

The solution I've started working on goes something like this: upon a fixation on the object, an event is fired. The event launches a new thread which aims at determining whether the fixation stays within the area long enough to be considered a dwell (the gaze data is noisy). Halfway through, it checks whether the area has received enough fixations to continue; otherwise it aborts the thread. At the end, it checks whether fixations have resided within the area for more than 70% of the time; in that case, it activates the function.
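The decision logic described above can be sketched as a plain function over recorded gaze samples. This is an illustration in Python rather than the threaded C# implementation, and several details are assumptions: the function name `is_dwell`, the 450 ms default dwell time, the use of sample counts as a stand-in for time (which presumes a steady sampling rate), and reusing the 70% ratio for the halfway check, whose actual threshold is not stated.

```python
def is_dwell(samples, area, dwell_ms=450.0, min_ratio=0.7):
    """Decide whether the gaze samples in the dwell window amount to a dwell.

    `samples` is a time-ordered list of (t_ms, x, y); the window starts at the
    first sample. `area` is (left, top, width, height) of the target object.
    """
    if not samples:
        return False
    left, top, width, height = area
    t_start = samples[0][0]

    def ratio_inside(t_end):
        window = [s for s in samples if t_start <= s[0] <= t_end]
        inside = [
            s for s in window
            if left <= s[1] <= left + width and top <= s[2] <= top + height
        ]
        return len(inside) / len(window)

    # Halfway check: abort early if too few samples landed on the object.
    if ratio_inside(t_start + dwell_ms / 2) < min_ratio:
        return False
    # The gaze data must actually cover the full dwell period.
    if samples[-1][0] - t_start < dwell_ms:
        return False
    # Final check: more than 70% of the samples must lie within the area.
    return ratio_inside(t_start + dwell_ms) > min_ratio
```

Tolerating some samples outside the area is what lets a dwell survive noisy gaze data; a stricter "every sample inside" rule would abort on the first stray reading.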

Working with threads can be somewhat tricky and some time for tweaking remains. In addition, getting the interaction to feel right and providing suitable feedback is important. I'm investigating means of a) providing feedback on which object is selected, b) indicating that the dwell process has started and its state, and c) animations to help the fixation remain in the center of the object.

--------------------------------------------------------------

Coding> Windows Presentation Foundation and Custom Controls

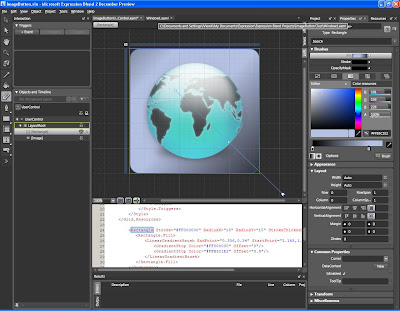

Other progress has been made in learning Windows Presentation Foundation (WPF) user interface development. Microsoft Expression Blend is a tool that enables graphical design of components. Creating generic objects such as gaze buttons will benefit the overall process in the longer run. Instead of having all of the objects defined in a single file, it is possible to break them into separate projects and later just include the component DLL file and use one line of code to include it in the design.

Additional styling and modification of the object's size can then be performed as if it were a default button. Furthermore, by creating dependency properties in the C# code behind each control/component, specific functionality can be easily accessed from the main XAML design layout. It does take a bit longer to develop but will be worth it tenfold later on. More on this further on.

Microsoft Expression Blend. Good companion for WPF and XAML development.

Windows Presentation Foundation (WPF) has proven to be more flexible and useful than it seemed at first glance. You can really do complex things swiftly with it. The following links have proven to be great resources for learning more about WPF.

http://www.contentpresenter.com and http://www.thewpfblog.com

Kirupa Chinnathambi, introduction to WPF, Blend and a nice blog. http://www.kirupa.com/blend_wpf/index.htm and http://blog.kirupa.com/

Microsoft MIX07, 72 hours of talks about the latest tools and tricks. http://sessions.visitmix.com/

0 comments

Labels: Blender, dwell time, Midas Touch, WPF
