Monday, May 9, 2011

"Read my Eyes" - A presentation of the ITU Gaze Tracker

Monday, November 1, 2010

ScanMatch: A novel method for comparing fixation sequences (Cristino et al., 2010)

Abstract

We present a novel approach to comparing saccadic eye movement sequences based on the Needleman–Wunsch algorithm used in bioinformatics to compare DNA sequences. In the proposed method, the saccade sequence is spatially and temporally binned and then recoded to create a sequence of letters that retains fixation location, time, and order information. The comparison of two letter sequences is made by maximizing the similarity score computed from a substitution matrix that provides the score for all letter pair substitutions and a gap penalty. The substitution matrix provides a meaningful link between each location coded by the individual letters. This link could be distance but could also encode any useful dimension, including perceptual or semantic space. We show, by using synthetic and behavioral data, the benefits of this method over existing methods. The ScanMatch toolbox for MATLAB is freely available online (www.scanmatch.co.uk).
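To make the encoding and alignment steps concrete, here is a minimal Python sketch of the idea. It assumes a regular grid of spatial bins, one code per 50 ms of fixation (temporal binning), a substitution score that falls off with distance between grid cells, and a constant gap penalty. Grid size, screen size, bin width, scoring and normalization are illustrative assumptions, not the ScanMatch toolbox's defaults or its MATLAB API; cells are coded as integers rather than letters, which is equivalent.

```python
import math

# Illustrative parameters (assumptions, not ScanMatch defaults).
GRID_COLS, GRID_ROWS = 5, 4        # spatial bins across the screen
SCREEN_W, SCREEN_H = 1000, 800     # screen size in pixels
TIME_BIN_MS = 50                   # one code per 50 ms of fixation
GAP = -1.0                         # gap penalty

def encode(fixations):
    """Turn (x, y, duration_ms) fixations into a sequence of grid-cell codes.

    Each fixation contributes its cell code once per temporal bin, so the
    sequence keeps location, order and (binned) duration.
    """
    seq = []
    for x, y, dur in fixations:
        col = min(int(x / SCREEN_W * GRID_COLS), GRID_COLS - 1)
        row = min(int(y / SCREEN_H * GRID_ROWS), GRID_ROWS - 1)
        repeats = max(1, round(dur / TIME_BIN_MS))
        seq.extend([row * GRID_COLS + col] * repeats)
    return seq

def substitution(a, b):
    """Score for aligning cell a with cell b: +1 for the same cell, -1 for opposite corners."""
    ra, ca = divmod(a, GRID_COLS)
    rb, cb = divmod(b, GRID_COLS)
    max_dist = math.hypot(GRID_ROWS - 1, GRID_COLS - 1)
    return 1.0 - 2.0 * math.hypot(ra - rb, ca - cb) / max_dist

def needleman_wunsch(s1, s2):
    """Global alignment score of two code sequences (dynamic programming)."""
    n, m = len(s1), len(s2)
    F = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        F[i][0] = i * GAP
    for j in range(1, m + 1):
        F[0][j] = j * GAP
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            F[i][j] = max(F[i - 1][j - 1] + substitution(s1[i - 1], s2[j - 1]),
                          F[i - 1][j] + GAP,   # gap in s2
                          F[i][j - 1] + GAP)   # gap in s1
    return F[n][m]

def similarity(fix1, fix2):
    """Divide by the best score attainable for the longer sequence, so identical scanpaths score 1.0."""
    s1, s2 = encode(fix1), encode(fix2)
    return needleman_wunsch(s1, s2) / max(len(s1), len(s2))
```

Because the substitution score depends only on grid distance here, nearby fixations are penalized less than distant ones; swapping in a different score function is how one would encode another dimension, such as semantic similarity between regions.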

- Filipe Cristino, Sebastiaan Mathôt, Jan Theeuwes, and Iain D. Gilchrist

ScanMatch: A novel method for comparing fixation sequences

Behav Res Methods 2010 42:692-700; doi:10.3758/BRM.42.3.692

Thursday, October 28, 2010

Gaze Tracker 2.0 Preview

HD video available (click 360p and select 720p)

Tuesday, August 17, 2010

How to build low-cost eye tracking glasses for a head-mounted system (M. Kowalik, 2010)

Download instructions as PDF (8.1 MB)

Wednesday, April 14, 2010

Open-source gaze tracker awarded Research Pearls of ITU Copenhagen

"The Open-Source ITU Gaze Tracker"

Abstract:

Gaze tracking offers users the possibility of interacting with a computer using only their eye movements, thereby making them more independent. However, some people (for example, users with a severe disability) are excluded from gaze interaction by the high prices of commercial systems (above €10,000). Gaze tracking systems built from low-cost, off-the-shelf components have the potential to facilitate access to the technology and to bring prices down.

The ITU Gaze Tracker is an off-the-shelf system that uses an inexpensive webcam or a video camera to track the user’s eye. It is free and open-source, offering users the possibility of trying out gaze interaction technology for as little as €20, and of adapting and extending the software to suit specific needs.

In this talk we will present the open-source ITU Gaze Tracker and show the different scenarios in which the system has been used and evaluated.

Friday, December 11, 2009

PhD Defense: Off-the-Shelf Gaze Interaction

Javier San Agustin will defend his PhD thesis on "Off-the-Shelf Gaze Interaction" at the IT University of Copenhagen on the 8th of January from 13.00 to (at most) 17.00. The program consists of a one-hour presentation followed by a discussion with the committee, formed by Andrew Duchowski, Bjarne Kjær Ersbøll, and Arne John Glenstrup. Afterwards, a traditional reception with snacks and drinks will be held.

Update: The thesis is now available as PDF (179 pages, 3.6 MB).

Abstract of the thesis:

People with severe motor-skill disabilities are often unable to use standard input devices such as a mouse or a keyboard to control a computer, and they are therefore in strong need of alternative input devices. Gaze tracking offers them the possibility to use the movements of their eyes to interact with a computer, thereby making them more independent. A big effort has been put toward improving the robustness and accuracy of the technology, and many commercial systems are now available on the market.

Despite the great improvements that gaze tracking systems have undergone in recent years, high prices have prevented gaze interaction from becoming mainstream. The use of specialized hardware, such as industrial cameras or infrared light sources, increases the accuracy of the systems, but also the price, which prevents many potential users from having access to the technology. Furthermore, the different components are often required to be placed in specific locations, or are built into the monitor, thus decreasing the flexibility of the setup.

Gaze tracking systems built from low-cost and off-the-shelf components have the potential to facilitate access to the technology and bring the prices down. Such systems are often more flexible, as the components can be placed in different locations, but also less robust, due to the lack of control over the hardware setup and the lower quality of the components compared to commercial systems.

The work developed for this thesis deals with some of the challenges introduced by the use of low-cost and off-the-shelf components for gaze interaction. The main contributions are:

- Development and performance evaluation of the ITU Gaze Tracker, an off-the-shelf gaze tracker that uses an inexpensive webcam or video camera to track the user's eye. The software is readily available as open source, offering the possibility to try out gaze interaction for a low price and to analyze, improve and extend the software by modifying the source code.

- A novel gaze estimation method based on homographic mappings between planes. No knowledge about the hardware configuration is required, allowing for a flexible setup where camera and light sources can be placed at any location.

- A novel algorithm to detect the type of movement that the eye is performing, i.e., fixation, saccade or smooth pursuit. The algorithm is based on eye velocity and movement pattern, and allows the signal to be smoothed appropriately for each kind of movement, removing jitter due to noise while maximizing responsiveness.
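A plane-to-plane mapping from eye features to the screen can be illustrated with a minimal Python sketch. It fits a generic 3×3 homography with the direct linear transform (DLT) from calibration pairs, assuming the eye feature is a 2-D point in the camera image (for example the pupil centre or a pupil-glint vector) and that at least four calibration targets were fixated. This is only the textbook homography fit; the thesis's gaze estimation method is not reproduced here, and the function names are hypothetical.

```python
import numpy as np

def fit_homography(src, dst):
    """Estimate H (3x3) with dst ~ H @ src from N >= 4 point pairs via the DLT.

    src: (N, 2) eye-feature coordinates; dst: (N, 2) screen coordinates.
    """
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.asarray(rows, dtype=float)
    # Least-squares solution: right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map (N, 2) points through H and divide out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]
```

With a 9-point calibration the system is over-determined and the SVD gives a least-squares fit. For real pixel-scale data, normalizing both point sets first (Hartley normalization) improves numerical conditioning.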

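The idea of classifying eye movements and then smoothing each class differently can be sketched in Python. This version uses velocity thresholds only (a simple I-VT-style rule with an extra band for pursuit) and a causal moving average whose window depends on the label. The thresholds, window sizes and function names are hypothetical, and the thesis algorithm, which also uses the movement pattern, is not reproduced here.

```python
import numpy as np

# Hypothetical thresholds in degrees per second, for illustration only.
SACCADE_VEL = 100.0
FIXATION_VEL = 5.0

def classify(x, y, fs):
    """Label each gaze sample as 'fixation', 'pursuit' or 'saccade' by velocity.

    x, y: gaze position in degrees; fs: sampling rate in Hz.
    """
    vel = np.hypot(np.diff(x), np.diff(y)) * fs
    vel = np.concatenate([[0.0], vel])  # first sample has no predecessor
    return np.where(vel > SACCADE_VEL, "saccade",
                    np.where(vel < FIXATION_VEL, "fixation", "pursuit"))

def adaptive_smooth(x, labels, window=5):
    """Causal moving average: long window in fixations, short in pursuit, none in saccades."""
    x = np.asarray(x, dtype=float)
    out = x.copy()
    for i, lab in enumerate(labels):
        if lab == "saccade":
            continue  # keep saccades raw to stay responsive
        w = window if lab == "fixation" else 2
        # Walk back over at most w samples that share the current label.
        start = i
        while start > 0 and i - start + 1 < w and labels[start - 1] == lab:
            start -= 1
        out[i] = x[start:i + 1].mean()
    return out
```

Smoothing only within a run of the same label avoids averaging fixation samples with the saccade that preceded them, which would otherwise smear the landing point.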
Friday, September 18, 2009

The EyeWriter project

The Eyewriter from Evan Roth on Vimeo.

eyewriter tracking software walkthrough from thesystemis on Vimeo.

More information can be found at http://fffff.at/eyewriter/

Tuesday, August 11, 2009

ALS Society of British Columbia announces Engineering Design Awards (Canadian students only)

"The ALS Society of British Columbia has established three Awards to encourage and recognize innovation in technology to substantially improve the quality of life of people living with ALS (Amyotrophic Lateral Sclerosis, also known as Lou Gehrig’s Disease). Students at the undergraduate or graduate level in engineering or a related discipline at a post-secondary institution in British Columbia or elsewhere in Canada are eligible for the Awards. Students may be considered individually or as a team. Mentor Awards may also be given to faculty supervising students who win awards" (see Announcement)

Project ideas:

- Low-cost eye tracker

- Issue: Current commercial eye-gaze tracking systems cost thousands to tens of thousands of dollars. The high cost of eye-gaze trackers prevents potential users from accessing eye-gaze tracking tools. The hardware components required for eye-gaze tracking do not justify the price, and a lower-cost alternative is desirable. Webcams may be used for low-cost imaging, along with simple infrared diodes for system lighting. Alternatively, visible-light systems may also be investigated. Open-source eye-gaze tracking software is also available. (ed: ITU GazeTracker, OpenEyes, Track Eye, OpenGazer and MyEye (free, no source))

- Goal: The goal of this design project is to develop a low-cost and usable eye-gaze tracking system based on simple commercial-off-the-shelf hardware.

- Deliverables: A working prototype of a functional, low-cost (< $200), eye-gaze tracking system.

- Eye-glasses compensation

- Issue: The use of eye-glasses can cause considerable problems in eye-gaze tracking. The issue stems from reflections off the eye-glasses caused by the controlled infrared lighting (on- and off-axis light sources) used to highlight features of the face. The key features of interest are the pupils and glints (reflections off the surface of the cornea). Incorrectly identifying the pupils and glints results in an invalid estimate of the point-of-gaze.

- Goal: The goal of this design project is to develop either: 1) techniques for avoiding image corruption by eye-glasses on a commercial eye-gaze tracker, or 2) a controlled lighting scheme that ensures the pupil and glints are correctly identified in the presence of eye-glasses.

- Deliverables: Two forms of deliverables are possible: 1) A working prototype illustrating functional eye-gaze tracking in the presence of eye-glasses with a commercial eye-gaze tracker, or 2) A working prototype illustrating accurate real-time identification of the pupil and glints using controlled infrared lighting (on and off axis light sources) in the presence of eye-glasses.
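The on- and off-axis lighting mentioned above is commonly used for bright/dark-pupil differencing: with on-axis light the retina reflects back through the pupil (bright pupil), with off-axis light it does not (dark pupil), so subtracting the two frames isolates the pupil. A minimal NumPy sketch, assuming two aligned grayscale frames and a hypothetical difference threshold, follows. It also shows why eye-glasses are a problem: a lens reflection present only in the on-axis frame survives the subtraction and pulls the centroid away from the pupil.

```python
import numpy as np

def find_pupil(bright, dark, thresh=40):
    """Locate the pupil from an on-axis (bright-pupil) and off-axis (dark-pupil) frame.

    Returns the (row, col) centroid of pixels that are more than `thresh`
    brighter in the on-axis frame, or None if no pixel qualifies.
    The threshold value is an illustrative assumption.
    """
    # Use a signed type so the subtraction of uint8 frames cannot wrap around.
    diff = bright.astype(np.int16) - dark.astype(np.int16)
    mask = diff > thresh
    if not mask.any():
        return None
    rows, cols = np.nonzero(mask)
    return rows.mean(), cols.mean()
```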

- Innovative selection with ALS and eye gaze

- Issue: As mobility steadily decreases in the more advanced stages of ALS, alternative techniques for selection are required. Current solutions include head switches, sip-and-puff switches and dwell-time activation, to name a few, depending on the degree of mobility loss. Dwell time requires no mobility other than eye motion; however, this technique suffers from ‘lag’, in that the user must wait the dwell-time duration for each selection, as well as from the ‘Midas touch’ problem, in which an unintended selection occurs if the gaze point remains stationary for too long.

- Goal: The goal of this design project is to develop a technique for improved selection with eye-gaze for individuals with only eye-motion available. Possible solutions may involve novel HCI designs for interaction, including various adaptive and predictive technologies, the consideration of contextual cues, and the introduction of ancillary inputs such as EMG or EEG.

- Deliverables: A working prototype illustrating eye-motion only selection with a commercial eye-gaze tracking system.
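A baseline dwell-time selector makes both drawbacks described above easy to see. The Python sketch below assumes gaze samples as (time_ms, x, y) tuples, rectangular targets and an 800 ms dwell; all names and values are hypothetical. Every selection costs the full dwell time (the lag), and because the timer restarts after each selection, simply resting the eyes on a target keeps selecting it (the Midas touch).

```python
from dataclasses import dataclass

@dataclass
class Target:
    name: str
    x: float
    y: float
    w: float
    h: float

    def contains(self, gx, gy):
        return self.x <= gx < self.x + self.w and self.y <= gy < self.y + self.h

def dwell_select(samples, targets, dwell_ms=800):
    """Yield (time_ms, target name) whenever gaze stays on one target for dwell_ms.

    samples: iterable of (time_ms, gx, gy) in chronological order.
    """
    current, start = None, None
    for t, gx, gy in samples:
        hit = next((tg for tg in targets if tg.contains(gx, gy)), None)
        if hit is not current:
            current, start = hit, t  # gaze moved to another target (or off all targets)
            continue
        if current is not None and t - start >= dwell_ms:
            yield t, current.name
            start = t  # restart the timer: a steady gaze re-selects (Midas touch)
```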

- Novel and valuable eye-gaze tracking applications and application enhancements

- Issue: To date, relatively few gaze-tracking applications have been developed. These include relatively simplistic applications such as the tedious typing of words, and even in such systems, little is done to ease the effort required, e.g., systems typically do not allow for the saving and reuse of words and sentences.

- Goal: The goal of this design project is to develop one or more novel applications or application enhancements that take gaze as input, and that provide new efficiencies or capabilities that could significantly improve the quality of life of those living with ALS.

- Deliverables: A working prototype illustrating one or more novel applications that take eye-motion as an input. The prototype must be developed to the extent that its potential efficiencies and/or reductions in effort can be evaluated by persons living with ALS and others on an evaluation panel.
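As a small illustration of the "saving and reuse of words" point, the Python sketch below stores words the user has typed and suggests completions by frequency, so one gaze selection of a suggestion can replace several letter selections. The class and method names are hypothetical and the ranking rule (frequency, then alphabetical) is just one simple choice.

```python
from collections import Counter

class WordStore:
    """Remember words the user has typed and suggest completions by frequency."""

    def __init__(self):
        self.counts = Counter()

    def learn(self, text):
        """Save every word of a finished phrase for later reuse."""
        for word in text.lower().split():
            self.counts[word] += 1

    def suggest(self, prefix, k=3):
        """Return up to k saved words starting with prefix, most frequent first."""
        prefix = prefix.lower()
        matches = [w for w in self.counts if w.startswith(prefix)]
        # Sort by descending frequency, then alphabetically for a stable order.
        matches.sort(key=lambda w: (-self.counts[w], w))
        return matches[:k]
```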

See the Project Ideas for more information. For contact information, see page two of the announcement.

Friday, April 17, 2009

IDG Interview with Javier San Agustin

Hopefully, ideas and contributions from the community will help the platform take off. As the initial release is a beta version, there are of course further improvements to make: additional cameras need to be verified and bugs in the code need to be fixed.

If you experience any issues or have ideas for improvements please post at http://forum.gazegroup.org

Sunday, April 5, 2009

Introducing the ITU GazeTracker

- Supports head mounted and remote setups

- Tracks both pupil and glints

- Supports a wide variety of camera devices

- Configurable calibration

- Eye-mouse capabilities

- UDPServer broadcasting gaze data

- Full source code provided
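Gaze data broadcast over UDP can be consumed by any program that listens on the right port. The Python sketch below shows the general pattern. The message layout ("STREAM_DATA <timestamp> <x> <y>") and the port number are placeholders assumed for illustration, not the tracker's documented format; check the tracker's settings or source code for the actual values before relying on them.

```python
import socket

def parse_gaze(message):
    """Parse a hypothetical 'STREAM_DATA <timestamp> <x> <y>' datagram.

    Returns (timestamp, x, y), or None for any other or malformed message.
    """
    parts = message.decode("ascii", errors="replace").split()
    if len(parts) != 4 or parts[0] != "STREAM_DATA":
        return None
    try:
        return int(parts[1]), float(parts[2]), float(parts[3])
    except ValueError:
        return None

def listen(port=6666, host=""):
    """Print gaze samples received on a UDP port (port number is an assumption).

    Binding to host "" listens on all interfaces, so datagrams sent from
    another machine on the network are received as well.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        while True:
            data, _addr = sock.recvfrom(1024)
            sample = parse_gaze(data)
            if sample is not None:
                print(sample)
```

Keeping parsing separate from the socket loop makes it easy to adapt the sketch once the real message format is known.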

Visit the ITU GazeGroup to download the software package. Please get in touch with us at http://forum.gazegroup.org

Tuesday, March 31, 2009

Radio interview with DR1

Tuesday, July 15, 2008

Sébastien Hillaire at IRISA Rennes, France

Automatic, Real-Time, Depth-of-Field Blur Effect for First-Person Navigation in Virtual Environment (2008)

"We studied the use of visual blur effects for first-person navigation in virtual environments. First, we introduce new techniques to improve real-time Depth-of-Field blur rendering: a novel blur computation based on the GPU, an auto-focus zone to automatically compute the user’s focal distance without an eye-tracking system, and a temporal filtering that simulates the accommodation phenomenon. Secondly, using an eye-tracking system, we analyzed users’ focus point during first-person navigation in order to set the parameters of our algorithm. Lastly, we report on an experiment conducted to study the influence of our blur effects on performance and subjective preference of first-person shooter gamers. Our results suggest that our blur effects could improve fun or realism of rendering, making them suitable for video gamers, depending however on their level of expertise."

- Sébastien Hillaire, Anatole Lécuyer, Rémi Cozot, Géry Casiez

Automatic, Real-Time, Depth-of-Field Blur Effect for First-Person Navigation in Virtual Environment. To appear in IEEE Computer Graphics and Applications (CG&A), 2008, pp. ??-??. Source code (please refer to my IEEE VR 2008 publication)
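The three ingredients of the abstract (a focal distance, a blur amount per pixel, and a delayed focus change) can be illustrated with standard formulas. The Python sketch below uses the thin-lens circle of confusion c = A · |d − d_f| / d · f / (d_f − f), a median depth over a small central zone as a stand-in auto-focus, and a first-order exponential filter to mimic accommodation delay. These are generic stand-ins, not the paper's GPU blur, auto-focus zone or temporal filter; lens parameters, zone size and time constant are hypothetical.

```python
import math
import numpy as np

def circle_of_confusion(depth, focal_dist, focal_len=0.05, aperture=0.02):
    """Thin-lens blur-circle diameter for a point at `depth` when focused at `focal_dist`.

    All distances in the same unit (e.g. metres); lens values are illustrative.
    """
    return aperture * abs(depth - focal_dist) / depth * focal_len / (focal_dist - focal_len)

def autofocus(depth, zone=0.1):
    """Focal distance = median depth in a central zone covering `zone` of each image axis."""
    h, w = depth.shape
    zh, zw = max(1, int(h * zone)), max(1, int(w * zone))
    r0, c0 = (h - zh) // 2, (w - zw) // 2
    return float(np.median(depth[r0:r0 + zh, c0:c0 + zw]))

class Accommodation:
    """Low-pass filter so the focal distance drifts toward its target instead of jumping."""

    def __init__(self, initial, tau=0.3):
        self.value, self.tau = initial, tau  # tau: time constant in seconds

    def update(self, target, dt):
        alpha = 1.0 - math.exp(-dt / self.tau)  # frame-rate-independent blend factor
        self.value += alpha * (target - self.value)
        return self.value
```

Per frame, one would compute the target focal distance (from the auto-focus zone, or from the gaze point when an eye tracker is available), pass it through the filter, and blur each pixel in proportion to its circle of confusion.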

"We describe the use of the user’s focus point to improve some visual effects in virtual environments (VE). First, we describe how to retrieve the user’s focus point in the 3D VE using an eye-tracking system. Then, we propose the adaptation of two rendering techniques which aim at improving users’ sensations during first-person navigation in VE using his/her focus point: (1) a camera motion which simulates eye movements when walking, i.e., corresponding to vestibulo-ocular and vestibulocollic reflexes when the eyes compensate body and head movements in order to maintain gaze on a specific target, and (2) a Depth-of-Field (DoF) blur effect which simulates the fact that humans perceive sharp objects only within some range of distances around the focal distance.

Second, we describe the results of an experiment conducted to study users’ subjective preferences concerning these visual effects during first-person navigation in VE. It showed that participants globally preferred the use of these effects when they are dynamically adapted to the focus point in the VE. Taken together, our results suggest that visual effects exploiting users’ focus point could be used in several VR applications involving first-person navigation, such as visits of architectural sites, training simulations, video games, etc."

Sébastien Hillaire, Anatole Lécuyer, Rémi Cozot, Géry Casiez

Using an Eye-Tracking System to Improve Depth-of-Field Blur Effects and Camera Motions in Virtual Environments. Proceedings of IEEE Virtual Reality (VR), Reno, Nevada, USA, 2008, pp. 47-51. Download paper as PDF.

QuakeIII DoF&Cam sources (depth-of-field, auto-focus zone and camera motion algorithms are under GPL with APP protection)

Tuesday, June 17, 2008

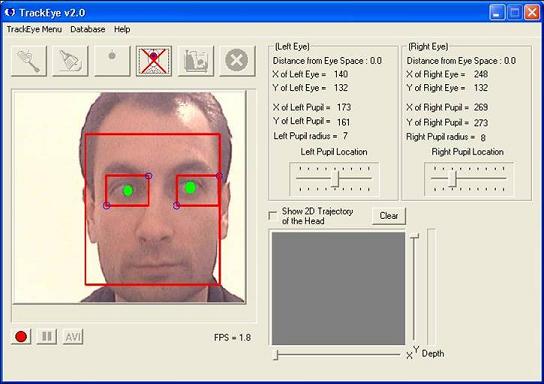

Open Source Eye Tracking

Download the executable as well as the source code. See the extensive documentation.

You will also need to download the OpenCV library.

"The purpose of the project is to implement a real-time eye-feature tracker with the following capabilities:

- Real-time face tracking with scale and rotation invariance

- Tracking the eye areas individually

- Tracking eye features

- Eye gaze direction finding

- Remote controlling using eye movements

The implemented project consists of three components:

- Face detection: Performs scale invariant face detection

- Eye detection: Both eyes are detected as a result of this step

- Eye feature extraction: Features of eyes are extracted at the end of this step

Two different methods for face detection were implemented in the project:

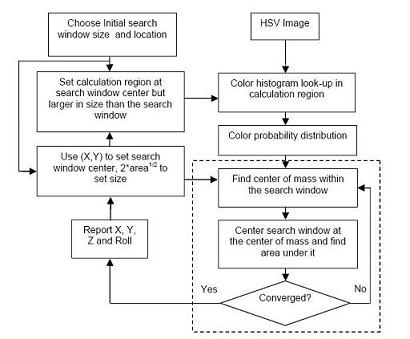

- Continuously Adaptive Mean-Shift (CAMShift) algorithm

- Haar Face Detection method
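The core of CAMShift is a mean-shift step: a search window is repeatedly re-centred on the centroid of a probability image (typically a skin-colour histogram back-projection) until it stops moving. The NumPy sketch below shows only that step; CAMShift additionally rescales the window from the zeroth moment and estimates its orientation on every frame, which is omitted here. OpenCV provides full implementations as `cv2.meanShift` and `cv2.CamShift`; the function below is a hypothetical illustration, not the project's code.

```python
import numpy as np

def mean_shift(prob, window, max_iter=20):
    """Move a (row, col, height, width) window to the local mode of `prob`.

    prob: 2-D array of non-negative weights, e.g. a colour back-projection.
    The initial window must lie inside the image.
    """
    r, c, h, w = window
    H, W = prob.shape
    for _ in range(max_iter):
        roi = prob[r:r + h, c:c + w]
        m00 = roi.sum()  # zeroth moment
        if m00 == 0:
            break  # nothing to track inside the window
        ys, xs = np.mgrid[0:roi.shape[0], 0:roi.shape[1]]
        cy = (ys * roi).sum() / m00  # centroid from first moments
        cx = (xs * roi).sum() / m00
        # Re-centre the window on the centroid, clamped to the image bounds.
        new_r = min(max(int(round(r + cy - (h - 1) / 2)), 0), H - h)
        new_c = min(max(int(round(c + cx - (w - 1) / 2)), 0), W - w)
        if (new_r, new_c) == (r, c):
            break  # converged
        r, c = new_r, new_c
    return r, c, h, w
```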

Two different methods of eye tracking were implemented in the project:

- Template-Matching

- Adaptive EigenEye method
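Template matching, the first of the two eye-tracking methods, slides an eye template over the image and keeps the position with the highest normalized cross-correlation. A minimal NumPy sketch follows, assuming grayscale arrays and a template cut from an earlier frame's detected eye region (the template source is an assumption). OpenCV's `cv2.matchTemplate` with `cv2.TM_CCOEFF_NORMED` computes the same zero-mean score far faster; the Adaptive EigenEye method is not sketched here.

```python
import numpy as np

def match_template(image, template):
    """Return ((row, col), score) of the best zero-mean normalized cross-correlation match."""
    image = np.asarray(image, dtype=float)
    t = np.asarray(template, dtype=float)
    th, tw = t.shape
    t = t - t.mean()
    t_norm = np.sqrt((t * t).sum())
    best, best_pos = -np.inf, None
    for r in range(image.shape[0] - th + 1):
        for c in range(image.shape[1] - tw + 1):
            p = image[r:r + th, c:c + tw]
            p = p - p.mean()
            denom = np.sqrt((p * p).sum()) * t_norm
            if denom == 0:
                continue  # flat patch or flat template: correlation undefined
            score = (p * t).sum() / denom  # in [-1, 1]; 1 means a perfect match
            if score > best:
                best, best_pos = score, (r, c)
    return best_pos, best
```

Because the score is normalized, it tolerates uniform changes in brightness and contrast between frames, which matters with webcam auto-exposure.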