Purpose: Conventional computer-assisted detection (CADe) systems in screening mammography provide the same decision support to all users. The aim of this study was to investigate the potential of a context-sensitive CADe system that provides decision support guided by each user’s focus of attention during visual search and by their reporting patterns for a specific case.

Methods: An observer study for the detection of malignant masses in screening mammograms was conducted in which six radiologists evaluated 20 mammograms while wearing an eye-tracking device. Eye-position data and diagnostic decisions were collected for each radiologist and each case they reviewed. These cases were subsequently analyzed with an in-house knowledge-based CADe system in two modes: a conventional mode with a globally fixed decision threshold, and a context-sensitive mode with a location-variable decision threshold based on the radiologists’ eye-dwell data and reporting information.
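
The difference between the two operating modes can be illustrated with a minimal sketch. It is not the in-house CADe system: the `Candidate` fields, the function names, the threshold values, and above all the rule that maps dwell time and reporting status to a local threshold are hypothetical, chosen only to show how a location-variable threshold differs from a globally fixed one.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    """A candidate location on one mammogram (all fields are illustrative)."""
    score: float      # CADe suspicion score in [0, 1]
    dwell_ms: float   # radiologist's cumulative eye dwell at this location
    reported: bool    # True if the radiologist already reported this location


def conventional_marks(cands: List[Candidate], threshold: float = 0.5) -> List[Candidate]:
    """Conventional mode: one globally fixed threshold for every location."""
    return [c for c in cands if c.score >= threshold]


def local_threshold(c: Candidate, attended_ms: float = 1000.0,
                    relaxed: float = 0.3, strict: float = 0.8) -> float:
    """Hypothetical rule for a location-variable threshold (an assumption)."""
    if c.reported:
        return float("inf")   # already reported: no prompt is needed
    if c.dwell_ms >= attended_ms:
        return relaxed        # attended but dismissed: prompt more readily
    return strict             # barely attended: prompt only on strong evidence


def context_sensitive_marks(cands: List[Candidate]) -> List[Candidate]:
    """Context-sensitive mode: the threshold depends on each location's context."""
    return [c for c in cands if c.score >= local_threshold(c)]


candidates = [
    Candidate(score=0.6, dwell_ms=0.0, reported=False),
    Candidate(score=0.4, dwell_ms=1500.0, reported=False),
    Candidate(score=0.9, dwell_ms=200.0, reported=True),
]
print(len(conventional_marks(candidates)), len(context_sensitive_marks(candidates)))
```

With these made-up inputs the conventional mode marks two candidates and the context-sensitive mode marks one, which shows how tailoring the threshold to a reader's behavior can change both which locations are prompted and how many.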

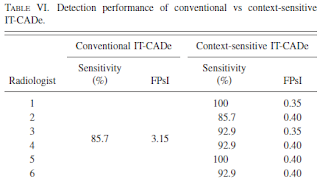

Results: The CADe system operating in conventional mode had 85.7% per-image malignant mass sensitivity at 3.15 false positives per image (FPsI). The same system operating in context-sensitive mode provided personalized decision support at 85.7%–100% sensitivity and 0.35–0.40 FPsI to all six radiologists. Furthermore, the context-sensitive CADe system could improve the radiologists’ sensitivity and reduce their performance gap more effectively than conventional CADe.
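
For readers unfamiliar with the two figures of merit, the sketch below shows how per-image sensitivity and FPsI are commonly computed. The function names and the input values are illustrative toy data, not the study's results, and the study's exact scoring criteria are not specified here.

```python
from typing import List


def per_image_sensitivity(hit_flags: List[bool]) -> float:
    """Fraction of malignant images on which the malignant mass was marked."""
    return sum(hit_flags) / len(hit_flags)


def false_positives_per_image(fp_counts: List[int]) -> float:
    """Total false-positive marks divided by the number of images evaluated."""
    return sum(fp_counts) / len(fp_counts)


# Toy data: four malignant images (three detected) and five evaluated images.
toy_hits = [True, True, False, True]
toy_fp_counts = [2, 0, 1, 1, 0]

print(per_image_sensitivity(toy_hits))           # 0.75
print(false_positives_per_image(toy_fp_counts))  # 0.8
```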