How do you distinguish between users glancing at objects and fixations made with the intention to start a function? This is the well-known problem usually referred to as the "Midas touch problem". Interfaces that rely on gaze as the only means of input must support this style of interaction and be capable of distinguishing between glances, when the user is just looking around, and fixations with the intention to start a function.

There are some solutions to this. A frequently used one is the concept of "dwell-time", where you activate a function simply by a prolonged fixation on an icon, button or image, usually in the range of 400-500 ms or so. This is a common solution when developing gaze interfaces for assistive technology for users with amyotrophic lateral sclerosis (ALS) or other paralyzing conditions where no modality other than gaze input can be used. It does come with some issues: the prolonged fixation makes the interaction slower, since the user has to sit through the dwell-time, but mainly it adds stress to the interaction, since everywhere you look seems to activate a function.

Since I intend to develop a set of components for the interface, a dwell-based interaction style should be supported. It may not be the best method, but I do wish to have it in the drawer so that I can evaluate it and perform experiments with it.

The solution I've started working on goes something like this: upon a fixation on the object, an event is fired. The event launches a new thread, which aims to determine whether the fixation stays within the area long enough to be considered a dwell (the gaze data is noisy). Halfway through, it checks whether the area has received enough fixations to continue; otherwise it aborts the thread. At the end, it checks whether fixations have resided within the area for more than 70% of the time; if so, it activates the function.
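
To make the two checkpoints concrete, here is a minimal, language-neutral sketch in Python. It is not the actual C# thread-based implementation. It assumes the gaze samples arrive evenly spaced, so the share of samples inside the area stands in for the share of time. The names `Rect`, `evaluate_dwell` and `halfway_ratio` are hypothetical, and the 50% halfway threshold is an assumption, since only the final 70% figure is fixed above.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Sample = Tuple[float, float, float]  # (timestamp_ms, x, y)


@dataclass(frozen=True)
class Rect:
    """Screen area of a gaze-aware object (hypothetical helper type)."""
    left: float
    top: float
    width: float
    height: float

    def contains(self, x: float, y: float) -> bool:
        return (self.left <= x <= self.left + self.width
                and self.top <= y <= self.top + self.height)


def evaluate_dwell(samples: Iterable[Sample], area: Rect,
                   dwell_ms: float = 500.0,
                   min_ratio: float = 0.7,
                   halfway_ratio: float = 0.5) -> str:
    """Classify one dwell attempt; the first sample is the triggering fixation.

    Returns "activated", "aborted_halfway" or "rejected".
    """
    data = list(samples)
    if not data or data[-1][0] - data[0][0] < dwell_ms:
        return "rejected"  # gaze data ended before the dwell window did

    start = data[0][0]
    inside = total = 0
    halfway_checked = False
    for t, x, y in data:
        elapsed = t - start
        if elapsed > dwell_ms:
            break
        # Halfway checkpoint: give up early if the gaze has mostly been elsewhere.
        if not halfway_checked and elapsed >= dwell_ms / 2:
            halfway_checked = True
            if total == 0 or inside / total < halfway_ratio:
                return "aborted_halfway"
        total += 1
        if area.contains(x, y):
            inside += 1

    # Final checkpoint: more than min_ratio of the samples must be inside.
    return "activated" if inside / total > min_ratio else "rejected"


button = Rect(left=100, top=100, width=80, height=40)
# 11 samples, 50 ms apart; two noisy ones land outside the button.
steady = [(t, 300, 300) if t in (150, 400) else (t, 140, 120)
          for t in range(0, 501, 50)]
print(evaluate_dwell(steady, button))  # activated
```

Tolerating a share of stray samples, rather than requiring every sample to hit the area, is what lets a dwell survive noisy gaze data. The early abort keeps short glances from occupying a worker for the full dwell-time.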

Working with threads can be somewhat tricky, and some time for tweaking remains. In addition, getting the interaction to feel right and providing suitable feedback is important. I'm investigating means of a) providing feedback on which object is selected, b) indicating that the dwell process has started and its state, and c) animations to help the fixation remain in the center of the object.

--------------------------------------------------------------

Coding> Windows Presentation Foundation and Custom Controls

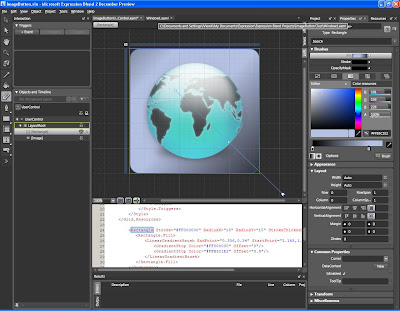

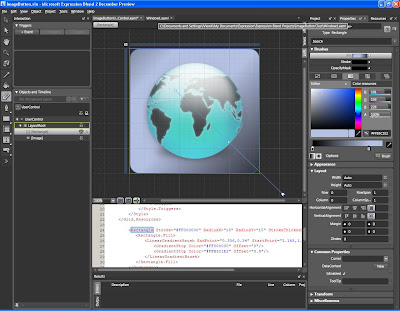

Other progress has been made in learning Windows Presentation Foundation (WPF) user interface development. Microsoft Expression Blend is a tool that enables graphical design of components. Creating generic objects such as gaze-buttons will benefit the overall process in the longer run. Instead of having all of the objects defined in a single file, it is possible to break them into separate projects and later just include the component DLL file and use one line of code to include it in the design. Additional styling and modification of the object's size can then be performed as if it were a default button. Furthermore, by creating dependency properties in the C# code behind each control/component, specific functionality can be easily accessed from the main XAML design layout. It does take a bit longer to develop but will be worth it tenfold later on. More on this further on.

Microsoft Expression Blend. Good companion for WPF and XAML development.

Windows Presentation Foundation (WPF) has proven to be more flexible and useful than it seemed at first glance. You can really do complex things swiftly with it. The following links have proven to be great resources for learning more about WPF.

Lee Brimelow, experienced developer who took part in the new Yahoo Messenger client. Excellent screencasts that take you through the development process. http://www.contentpresenter.com and http://www.thewpfblog.com

Kirupa Chinnathambi, introduction to WPF, Blend and a nice blog. http://www.kirupa.com/blend_wpf/index.htm and http://blog.kirupa.com/

Microsoft MIX07, 72 hours of talks about the latest tools and tricks. http://sessions.visitmix.com/