The Eye Tracking Device (ETD) is used to determine the influence of prolonged microgravity and the accompanying vestibular (inner ear) adaptation on the orientation of Listing's Plane (a coordinate framework used to define the movement of the eyes in the head).
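
To make "Listing's Plane" concrete: in the oculomotor literature, a three-dimensional eye position is often written as a rotation vector r = tan(θ/2)·n, where n is the unit axis and θ the angle of the single rotation that carries the eye from its primary (reference) position to the current one. Listing's law states that, with the head still, these rotation axes all lie in one plane, Listing's Plane. The minimal sketch below assumes a head-fixed frame with x pointing forward along the primary line of sight (the torsional axis), y to the left and z up, so that Listing's Plane coincides with the y-z plane. The function names and the tolerance are illustrative assumptions, not part of the ETD software.

```python
import math

def rotation_vector(axis, angle_deg):
    """Rotation vector tan(angle/2) * unit(axis) for an eye rotation of
    angle_deg degrees about `axis`, measured from the primary position.
    Assumed frame: x forward (torsional), y left, z up."""
    norm = math.sqrt(sum(a * a for a in axis))
    if norm == 0:
        raise ValueError("axis must be non-zero")
    half_tan = math.tan(math.radians(angle_deg) / 2.0)
    return tuple(half_tan * a / norm for a in axis)

def obeys_listing(r, tol=1e-3):
    """True if the torsional (x) component of r is within tol, i.e. the
    rotation axis lies in the y-z plane, taken here as Listing's Plane."""
    return abs(r[0]) <= tol

# 20 deg leftward gaze: rotation about the vertical (z) axis.
left = rotation_vector((0, 0, 1), 20)
# 15 deg oblique gaze: axis lies in the y-z plane.
oblique = rotation_vector((0, 1, 1), 15)
# 5 deg of pure torsion about the line of sight violates Listing's law.
torsion = rotation_vector((1, 0, 0), 5)
print(obeys_listing(left), obeys_listing(oblique), obeys_listing(torsion))
# True True False
```

Using tan(θ/2) rather than θ keeps the representation exact for composing rotations; for the small angles typical of gaze shifts the two scalings differ only slightly.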

"The working hypothesis is that in microgravity the orientation of Listings Plane is altered, probably to a small and individually variable degree. Further, with the loss of the

otolith-mediated gravitational reference, it is expected that changes in the orientation of the coordinate framework of the vestibular system occur, and thus a divergence between Listing?s Plane and the vestibular coordinate frame should be observed. While earlier ground-based experiments indicate that Listing?s Plane itself is to a small degree dependent on the pitch orientation to gravity, there is more compelling evidence of an alteration of the orientation of the

vestibulo-ocular reflex (

VOR), reflex eye movement that stabilizes images on the retina during head movement by producing an eye movement in the direction opposite to head movement, thus preserving the image on the center of the visual field, in microgravity.

Furthermore, changes in bodily function with relation to eye movement and spatial orientation that occur during prolonged disturbance of the vestibular system most likely play a major role in the problems with balance that astronauts experience following re-entry from space.

In view of the much larger living and working space in the ISS, and the extended program of spacewalks (EVAs) being planned, particular care must be given to assessing the reliability of functions related to eye movement and spatial orientation.

The performance of the experiments in space is therefore of interest for their expected contribution to basic research knowledge and to the improvement and assurance of human performance under weightless conditions."
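
The hypothesis above is phrased as a "divergence" between two planes. Geometrically, the angle between two planes equals the angle between their normal vectors, so one simple way to quantify such a divergence is sketched below. The vectors used here are hypothetical: the Listing's Plane normal would come from a plane fit to measured eye positions, and the vestibular reference normal would have to be estimated separately (for example from VOR recordings); neither procedure is specified in the source, and this is not the experiment's published analysis.

```python
import math

def plane_tilt_deg(normal, reference_normal):
    """Angle in degrees between two planes, given their normal vectors.
    The absolute dot product is used because a plane's normal has no
    preferred sign, so the result lies in [0, 90]."""
    dot = sum(n * r for n, r in zip(normal, reference_normal))
    norm_n = math.sqrt(sum(n * n for n in normal))
    norm_r = math.sqrt(sum(r * r for r in reference_normal))
    if norm_n == 0 or norm_r == 0:
        raise ValueError("normal vectors must be non-zero")
    # Clamp to guard against rounding slightly above 1.0.
    cos_angle = min(1.0, abs(dot) / (norm_n * norm_r))
    return math.degrees(math.acos(cos_angle))

# Hypothetical values: a Listing's Plane normal tilted slightly away from
# a head-fixed forward axis standing in for the vestibular reference.
listing_normal = (1.0, -0.1, 0.05)
vestibular_normal = (1.0, 0.0, 0.0)
print(round(plane_tilt_deg(listing_normal, vestibular_normal), 2))
# 6.38
```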

NASA Image: ISS011E13710 - Cosmonaut Sergei K. Krikalev, Expedition 11 Commander representing Russia's Federal Space Agency, uses the Eye Tracking Device (ETD), a European Space Agency (ESA) payload in the Zvezda Service Module of the International Space Station. The ETD measures eye and head movements in space with great accuracy and precision.

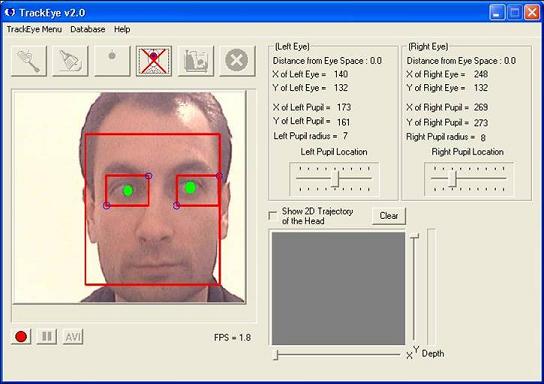

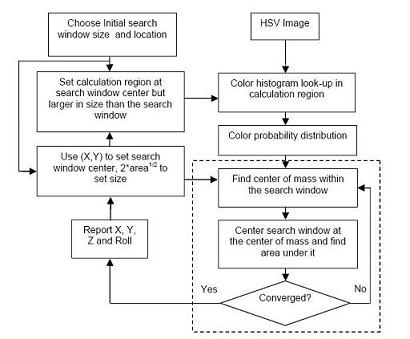

"The ETD consists of a headset that includes two digital camera modules for binocular recording of horizontal, vertical and rotational eye movements and sensors to measure head movement. The second ETD component is a laptop PC, which permits digital storage of all image sequences and data for subsequent laboratory analysis. Listing's Plane can be examined fairly simply, provided accurate three-dimensional eye-in-head measurements can be made. Identical experimental protocols will be performed during the pre-flight, in-flight and post-flight periods of the mission. Accurate three-dimensional eye-in-head measurements are essential to the success of this experiment. The required measurement specifications (less than 0.1 degrees spatial resolution, 200 Hz sampling frequency) are fulfilled by the Eye Tracking Device (ETD)."
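
The quoted text says Listing's Plane can be examined "fairly simply" once accurate three-dimensional eye-in-head measurements exist. One common approach in the oculomotor literature is to fit the torsional component of the rotation vectors as a linear function of the other two, r_x = a + b·r_y + c·r_z, and to report the spread of the residuals as the plane's "thickness". The sketch below does this with a plain least-squares fit. It assumes the same frame as before (x torsional, y left, z up) and rotation vectors already computed from the recordings; the function names and the synthetic data are hypothetical, and this is not the ETD's own analysis software.

```python
import math

def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def _solve3(m, v):
    """Solve the 3x3 linear system m @ x = v by Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-15:
        raise ValueError("eye positions are degenerate (e.g. all on one line)")
    solution = []
    for k in range(3):
        # Replace column k of m with the right-hand side v.
        mk = [[v[i] if j == k else m[i][j] for j in range(3)] for i in range(3)]
        solution.append(_det3(mk) / d)
    return solution

def fit_listing_plane(rot_vectors):
    """Least-squares fit of r_x = a + b*r_y + c*r_z to (r_x, r_y, r_z)
    rotation vectors. Returns (a, b, c, thickness), where thickness is the
    RMS of the torsional residuals about the fitted plane."""
    if len(rot_vectors) < 3:
        raise ValueError("need at least 3 eye positions")
    # Accumulate the normal equations M @ [a, b, c] = v for rows [1, r_y, r_z].
    m = [[0.0] * 3 for _ in range(3)]
    v = [0.0] * 3
    for rx, ry, rz in rot_vectors:
        row = (1.0, ry, rz)
        for i in range(3):
            v[i] += row[i] * rx
            for j in range(3):
                m[i][j] += row[i] * row[j]
    a, b, c = _solve3(m, v)
    residuals = [rx - (a + b * ry + c * rz) for rx, ry, rz in rot_vectors]
    thickness = math.sqrt(sum(e * e for e in residuals) / len(residuals))
    return a, b, c, thickness

def plane_normal(b, c):
    """Unit normal of the fitted plane r_x - b*r_y - c*r_z = a."""
    norm = math.sqrt(1.0 + b * b + c * c)
    return (1.0 / norm, -b / norm, -c / norm)

# Synthetic, noise-free eye positions lying exactly on r_x = 0.02 + 0.1*r_y - 0.05*r_z.
grid = [-0.2, 0.0, 0.2]
data = [(0.02 + 0.1 * ry - 0.05 * rz, ry, rz) for ry in grid for rz in grid]
a, b, c, thickness = fit_listing_plane(data)
print(round(a, 6), round(b, 6), round(c, 6), round(thickness, 6))
# 0.02 0.1 -0.05 0.0
```

The coefficients b and c describe how far the plane is tilted relative to the head-fixed frame, and the unit normal from `plane_normal` is the quantity one would compare across pre-flight, in-flight and post-flight sessions. With real recordings the thickness would be non-zero and reflects both measurement noise and genuine torsional deviations, which is one reason the sub-0.1-degree resolution quoted above matters.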

More information:

http://www.nasa.gov/mission_pages/station/science/experiments/ETD.html

http://www.spaceflight.esa.int/delta/documents/factsheet-delta-hp-etd.pdf

http://www.energia.ru/eng/iss/researches/medic-65.html