SMI and Lund University Humanities Lab in Lund, Sweden, will hold the first Lund Eye Tracking Academy (LETA). Hosted by Kenneth Holmqvist and his team, the course will take place on June 11-13, 2008.

"We have decided to start our 'eye-tracking academy' to help students, researchers and labs who are starting out with eye-tracking to get up and running, giving them a flying start. Although eye-tracking research can be both fascinating and extremely useful, doing good eye-tracking research requires a certain minimum of knowledge. Without a basic understanding of what can be done with an eye-tracker, how to design an experiment and how to analyse the data, a study runs the risk of just producing a lot of data that cannot really answer any questions.

Currently, to our knowledge, there is no recurring, open course in eye-tracking in Europe outside Lund, and everybody is trained person-to-person in the existing larger research labs. In Lund, we already hold a 10-week, 7.5 ECTS Master's-level course in eye-tracking. Now we offer another course: an intensive 3-day course, open to everyone interested in eye-tracking who wants to get a flying start, acquire a basic understanding of how to run a scientifically sound eye-tracking experiment, and get high-quality data that they know how to analyse.

This training course is open to all researchers and investigators who are about to start, or are in the early phases of, using eye-tracking, and to users wanting to refresh their knowledge of their system. It is open to attendees from universities as well as from industry. As part of the course, attendees will work hands-on with sample experiments, from experimental design to data analysis. Participants will train on state-of-the-art SMI eye-tracking systems, but the course is largely hardware-independent and open to users of other systems."

Scheduled dates: 11-13 June 2008. The course starts at 09:00 and ends at around 16:00 on the last day.

Course contents:

• Pros and cons of head-mounted, remote and contact eye-trackers.

• High sampling speed and detailed precision – who needs it?

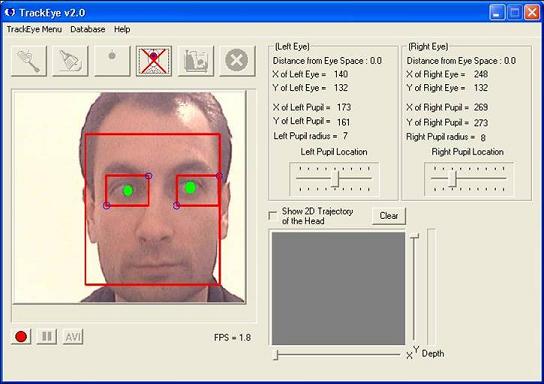

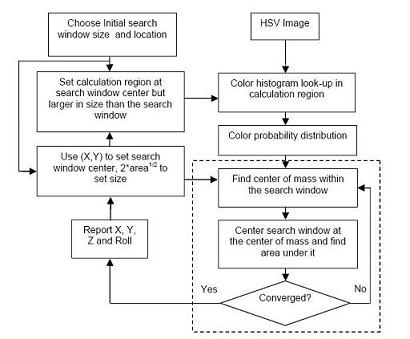

• Gaze-overlaid videos vs. data files – what can you do with them?

• How to set up and calibrate a variety of subjects on different eye-trackers?

• Glasses, lenses, mascara and drooping eyelids – what to do?

• How to work with stimulus programs, and synchronize them with eye-tracking recording?

• How to deal with consent forms and ethical issues?

• Short introduction to experimental design: Potentials and pitfalls.

• Visualisation of data vs. number crunching.

• Fast data analysis of multi-user experiments.

• Fixation durations, saccadic amplitudes, transition diagrams, group similarity measures, and all the other measures – what do they tell us? What are the pitfalls?

Teaching methods:

Lectures on selected topics (8 h).

Hands-on work in our lab on prespecified experiments: receiving and recording a subject (9 h). Hands-on initial data analysis (3 h).

Eye-tracking systems available for this training:

• 2 × SMI HED 50 Hz with Polhemus head-tracking

• 3 × SMI HiSpeed 240/1250 Hz

• 1 × SMI RED-X remote 50 Hz

• 2 × SMI HED-mobile 50/200 Hz

Attendance fee: €750, including course material, diploma and lunches, if you register before June 5th.

We will only run the course if we get enough participants.

Register online.

The course content is equivalent to 1 ECTS credit at Master's level or above, although we cannot currently provide official registration at Lund University for this credit.